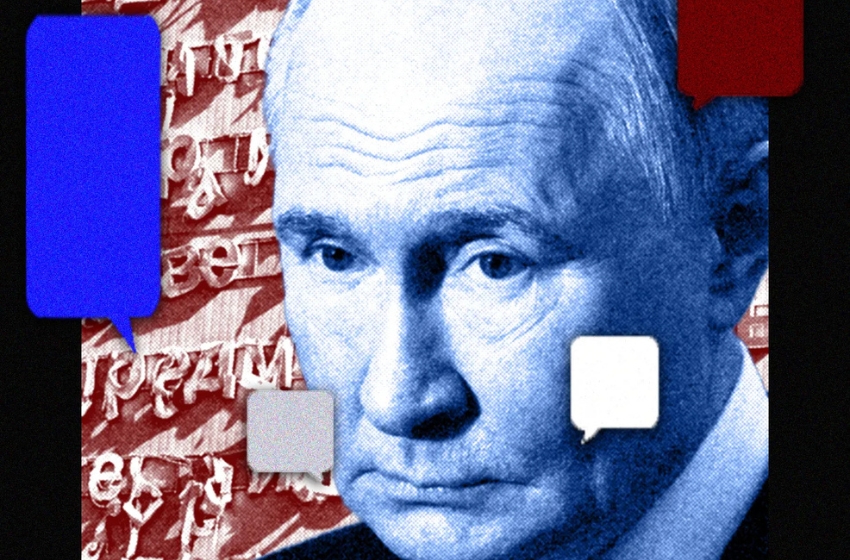

Popular chatbots such as OpenAI’s ChatGPT, Google’s Gemini, DeepSeek, and xAI’s Grok often cite sources linked to Russian state media, agencies, or pro-Kremlin disinformation networks when responding to questions about Russia’s war against Ukraine, according to a study by the Institute for Strategic Dialogue (ISD), as reported by Wired.

In the experiment, researchers asked chatbots 300 questions on topics ranging from perceptions of NATO and peace negotiations to war crimes, refugees, and mobilization in Ukraine. The questions included neutral, biased, and overtly manipulative prompts. Responses were requested in five languages (English, Spanish, French, German, Italian).

The results were concerning: about 18% of chatbot answers referenced sources directly connected to Russian state or pro-Kremlin media. For overtly manipulative (“malicious”) prompts, the share of such references reached roughly 25%, while for neutral questions it was slightly over 10%.

Researchers noted that propagandistic references were more likely on topics that lack reliable public information, so-called “data voids.” Russia and pro-Kremlin networks actively exploit these gaps to inject their narratives and influence AI algorithms.

Sources cited by the chatbots included RT (formerly “Russia Today”), Sputnik, the Strategic Culture Foundation, and other media and networks sanctioned by the EU for involvement in disinformation campaigns.

OpenAI confirmed that it is taking steps to prevent ChatGPT from spreading false or misleading content, including material linked to state propaganda actors. However, a company representative noted that part of the issue stems from internet search results rather than direct manipulation of the model.

Experts emphasize that chatbots' vulnerability to manipulation matters because they have become reference points and sources of information for millions of users: according to OpenAI, ChatGPT had around 120.4 million monthly active users in the EU as of September 2025. They warn that such cases undermine trust and can amplify foreign influence campaigns.

The report also stresses the growing importance of regulating chatbots and ensuring greater transparency of information sources. It argues that developers should agree on which sources to exclude and which to accompany with explanatory context when they are linked to foreign disinformation actors.